Neural Network MIDI generator

While I was doing some work on other things, an interesting video titled 1500 slot machines walk into a bar was brought to my attention. These guys were “hacking” in the purest sense of the term: abusing existing technologies to achieve an end goal.

With this in mind I decided to start looking into how easily I could use a machine to generate music like an automated assembly line, as the thought of having generated assets for small personal games I may or probably may not develop really interests me.

HERE COMES SOME NERD SHIT

It’s OK, I too don’t really care how NNs work at an extremely in-depth level. I’m OK with thinking of them as black boxes I throw things into and results spit out. But to put some meat into this post, here is some extremely light theory.

“Schematically, a RNN layer uses a for loop to iterate over the timesteps of a sequence, while maintaining an internal state that encodes information about the timesteps it has seen so far.”

Well, that’s sufficient for me. But here’s some other stuff that irritated me not knowing, so I shall list it.

RNN

- Recurrent neural network.

- Good for predicting things.

- Requires training to learn, given a pattern of X, what the most likely next element in the sequence will be.

- Deceptively simple; accepts an input of x and gives an output of y. This is the whole thing, really.

Hidden layer

- Mathematical function your input of x will be passed through, or have slapped on. I don’t remember how math works.

- More hidden layers == more functions being applied.

LSTM

- Long Short-Term Memory

- Type of RNN

- Useful when the network has to ‘remember’ things for a long time, like music or text generation

Steps update the hidden states when called. 1000 steps == 1000 hidden state activations, or the functions being slapped on your input 1000 times.
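If the “for loop over timesteps” description still feels abstract, here is a toy sketch of it in plain Python. It is a one-unit RNN, the weights are numbers made up for illustration (a real network learns them during training), and a real layer uses matrices rather than single numbers. It is not how Magenta or TensorFlow implement it, just the shape of the idea.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit toy RNN over a sequence and return every hidden state.

    w_x, w_h and b are made-up illustrative weights, not learned values.
    """
    h = 0.0  # internal state; empty before the first timestep
    states = []
    for x in inputs:  # the "for loop over the timesteps of a sequence"
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Only the first timestep has a non-zero input, yet the later states are
# still non-zero: the hidden state is "remembering" what it saw earlier.
states = rnn_forward([1.0, 0.0, 0.0])
print(states)
```

Three timesteps in, three hidden state updates out. Scale that up to matrices and you have the basic recurrence.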

And that’s about all I care to look into really. This is an insanely in-depth guide; you may enjoy it.

So to start, we need python 3 installed.

It MUST be 3.7; TensorFlow (the version we are using, anyway) is not compatible with 3.8.X, and this will NOT run under Python 2. I cannot say whether it works on 3.8.0 or not; I KNOW it runs on 3.7.8, however.

NOTE FROM 2023: magenta will NOT install on Python 3.10 either. Follow the advice I laid out and just stick to 3.7; however, you CAN use the latest versions of tensorflow and magenta! I have just produced a fresh batch of 20 tracks to quantize and put into my EP.
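If you want to make sure you are on the right interpreter before installing anything, a quick check like this works. The helper name is made up for this post; the only thing it encodes is the “stick to 3.7” advice above.

```python
import sys

def is_supported(version_info):
    """True only for Python 3.7.x, the version this walkthrough was run on."""
    return tuple(version_info[:2]) == (3, 7)

# Warn rather than crash, so the check can sit at the top of any script.
if not is_supported(sys.version_info):
    print("Warning: running Python %d.%d, this guide expects 3.7"
          % tuple(sys.version_info[:2]))
```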

I recommend you SCRUB THE SHIT out of your pip installations before starting in case you have some stuff in there that clashes.

pip freeze > requirements.txt

pip uninstall -r requirements.txt -y

pip cache purge

In 2020 you could NOT install TensorFlow with pip install tensorflow as you would expect; you HAD to use

pip install --upgrade https://storage.googleapis.com/tensorflow/mac/cpu/tensorflow-1.12.0-py3-none-any.whl

These days, as long as you are 1000% on Python 3.7.8, we can use the latest version of tensorflow

pip install tensorflow

Next we install magenta, the tool that was made for researching AI in the creative space (music and images). Pretty much a tool purpose-built for this experiment! Once again, the latest versions work fine on 3.7.8.

pip install magenta

Back in 2020, installing magenta left our TensorFlow broken, and the fix was

pip install tensorflow --upgrade --force-reinstall

That does not happen anymore! The future is glorious!

But there is one new step in 2023 that was not required in 2020.

pip uninstall resampy

pip install resampy==0.3.1

The latest version of resampy just refuses to parse tracks. The fix is simply to downgrade it. So not 100% seamless, but still easier than it was in 2020.

Now our framework is installed. Let’s get some data to feed our hungry hungry program!

This is a repo of over 1000 MIDI tracks. It proved a good base for this. Get it here

Now we want to turn the MIDI tracks into a textual representation of the music. Think of it like the sheet music for each of the MIDI tracks being given to the computer so it can analyse it.

convert_dir_to_note_sequences --input_dir="C:\Users\root\desktop\nintendo_midi" --output_file="C:\Users\root\desktop\nintendo_midi.tfrecord" --recursive

Now we create the training set. The ratio is the fraction of the MIDI tracks that will be held back as evaluation files; i.e., the NN will learn from 0.90 of them and the remaining 0.10 will be what it compares its results against. A helpful, or not, point is made here: “Like many other things in machine learning, the train-test-validation split ratio is also quite specific to your use case and it gets easier to make judgement as you train and build more and more models.”

Keep this at 0.10 because it was what worked for me, or change it and see what happens. It seems there is a lot of discussion about 70/30 though. I should do a rerun to see if maybe it learns faster with that ratio.

There is also a chance that it’ll make it learn WORSE and only output garbage, since its dataset will be reduced.

polyphony_rnn_create_dataset --input="C:\Users\root\desktop\nintendo_midi.tfrecord" --output_dir="C:\Users\root\desktop\polyphony_rnn\sequence_examples" --eval_ratio=0.10
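To make the ratio concrete, here is a rough sketch of what a 0.10 evaluation split does to a pile of tracks. This is not Magenta’s code; I am assuming each track is randomly assigned to one pile or the other, which means the split comes out approximately 90/10 rather than exactly. The function name and the fake file names are made up for the example.

```python
import random

def split_dataset(items, eval_ratio=0.10, seed=0):
    """Randomly assign each item to the training or evaluation pile.

    Illustrative only: per-item random assignment is an assumption,
    so the evaluation pile is roughly (not exactly) eval_ratio of the total.
    """
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    training, evaluation = [], []
    for item in items:
        if rng.random() < eval_ratio:
            evaluation.append(item)
        else:
            training.append(item)
    return training, evaluation

tracks = ["track_%04d.mid" % i for i in range(1000)]  # stand-in file names
training, evaluation = split_dataset(tracks, eval_ratio=0.10)
print(len(training), "for training,", len(evaluation), "for evaluation")
```

Bumping eval_ratio to 0.30 in the sketch shows the trade-off straight away: more tracks to check against, fewer to learn from.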

RUN IT

polyphony_rnn_train --run_dir="C:\Users\root\desktop\polyphony_rnn\logdir\run3" --sequence_example_file="C:\Users\root\desktop\polyphony_rnn\sequence_examples\training_poly_tracks.tfrecord" --hparams="batch_size=64,rnn_layer_sizes=[128,128,128]" --num_training_steps=1000

The --num_training_steps number is how many training steps it’ll run. Adjust it to vary how long you want it to run. Basically one every two minutes on my rig, give or take. I found runs of 1000 to be good to kick off before bed and have it done when I got home from werk.

A batch size of 64 instead of the default of 128, to reduce memory usage, was what was suggested to me when I started. Realistically, though, I have an inordinate amount of RAM, kindly provided by one of my previous employers in a strange scenario involving basically demanding they provide me an extra 32 GB of RAM so I could complete some arbitrary certification they wanted me to get that did not require this at all, based mostly on a drunken dare from my coworker after we went to the pub for lunch. So I probably shouldn’t have bothered adjusting the batch size. If anything I should increase it for another run just to see what happens.

To make it train faster it was also suggested I use 3 layers of 128 functions, because I wanted results immediately (the default for each layer is 256 functions). I will adjust these to see what happens. Maybe it’ll take a longer time to crunch 1k steps but it’ll require fewer steps? Hmm. It’s hard to move away from the settings I have in place because I know they do eventually produce results. I cannot remember the name for this sort of bias, but I MUST do more experiments.
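Since I plan to fiddle with these numbers, and the generate command further down gets passed the same hparams string as the training command, it is handy to build the string in one place so the two never drift apart. A small sketch; the helper name is made up, and it only produces the exact format used in the commands in this post.

```python
def build_hparams(batch_size=64, rnn_layer_sizes=(128, 128, 128)):
    """Build the value for --hparams in the format used in this post."""
    layers = ",".join(str(size) for size in rnn_layer_sizes)
    return "batch_size=%d,rnn_layer_sizes=[%s]" % (batch_size, layers)

# The settings used for the runs described here.
print(build_hparams())  # batch_size=64,rnn_layer_sizes=[128,128,128]

# A heavier experiment to try next: bigger batch, bigger layers.
print(build_hparams(128, [256, 256, 256]))
```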

MAKE SOME MUSIC

polyphony_rnn_generate --run_dir="C:\Users\root\Desktop\polyphony_rnn\logdir\run3" --hparams="batch_size=64,rnn_layer_sizes=[128,128,128]" --output_dir="C:\Users\root\Desktop\polyphony_rnn\generated" --num_outputs=10 --num_steps=128 --primer_melody="[20]" --condition_on_primer=true --inject_primer_during_generation=false

The --primer_melody argument can be replaced with --primer_pitches; for example, --primer_pitches="[67,64,60]" represents a chord with quarter note duration. I myself like to use the primer_melody though; it is analogous to how I formed my understanding of guitar theory, root notes.

--num_steps sets how long the generated track will be; 128 seems to be 30 seconds.
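The numbers in those primer arguments are MIDI pitch numbers. If, like me, you think in note names rather than numbers, a tiny converter helps. This sketch assumes the common convention where MIDI 60 is C4 (middle C); some software labels it C3, so the octave number may be off by one in your DAW.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def midi_to_name(pitch):
    """Convert a MIDI pitch number (0-127) to a name like 'C4'.

    Assumes the convention where pitch 60 is C4 (middle C).
    """
    octave = pitch // 12 - 1
    return NOTE_NAMES[pitch % 12] + str(octave)

# The example chord from --primer_pitches: a C major triad.
print([midi_to_name(p) for p in [67, 64, 60]])  # ['G4', 'E4', 'C4']

# The single root note used in --primer_melody above.
print(midi_to_name(20))  # G#0
```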

If the condition_on_primer argument is set to true, then the RNN will receive the primer as its input before it begins generating a new sequence. This is useful if you’re using the primer pitches as a chord to establish the key. If inject_primer_during_generation is true, the primer will be injected as a part of the generated sequence. This is useful if you want to harmonize an existing melody.

And voila. After about 8k steps or so it started to sound good. Some of the tracks it generated could easily be shoved into a NES game.

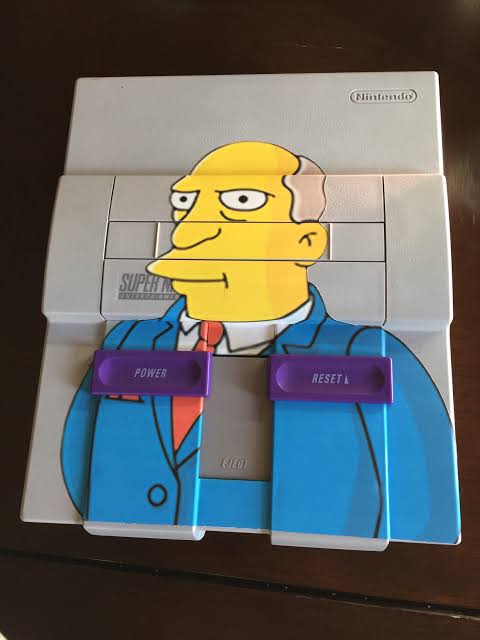

I have run these through a VST called NES VST 1.2 and blended it with FAMISYNTH-II to give it a sort of SNES feel by utilising the extra voice channels available, without going to the extent of setting up something like C700; that plugin WILL sound like SNES since you can drop in SNES instrument samples, but it’s far beyond the amount of effort I want to apply. Yeah, it doesn’t sound like Chrono Trigger or anything, but I have played plenty of Nintendo games with worse sounds than this.

I will write up an automated workflow for this cause I think it would be cool to have the two work hand in hand; the tracks being generated and automagically being imported into my DAW to have them “transformed” if you like.

I have written up a PowerShell script that leverages Reaper’s batch rendering function. Linked here

Here are some examples I liked.